After the study that incited formaldegeddon, one suggesting a reduction in immune response for vapers and another confirming that the public is horribly misinformed about e-cigs, the issue of reliability in e-cigarette research is being brought into the limelight. So, in the manner of “A Rough Guide to Spotting Bad Science,” is there anything we should be on the lookout for when considering the findings of e-cigarette studies? Whether by incompetence or by design, there are many common problems with vaping-related research, and particularly with the conclusions drawn from it in press releases and media reports, that any critical reader of the science should keep an eye out for. Of course, this isn’t an exhaustive guide to spotting flawed research (and many points are related to incorrect interpretations rather than the studies themselves), but it addresses some of the most common errors we’ve noticed from our coverage of the research, and from great blogs like the Rest of the Story, E-Cigarette Research, Anti-THR Lies, the Counterfactual, Velvet Glove, Iron Fist and Tobacco Truth. And although the studies with negative findings seem to fall foul of these mistakes more often than the ones with positive findings, it goes without saying that both sides of the debate are guilty from time to time.

1: Unrealistic Settings and Puffing Conditions

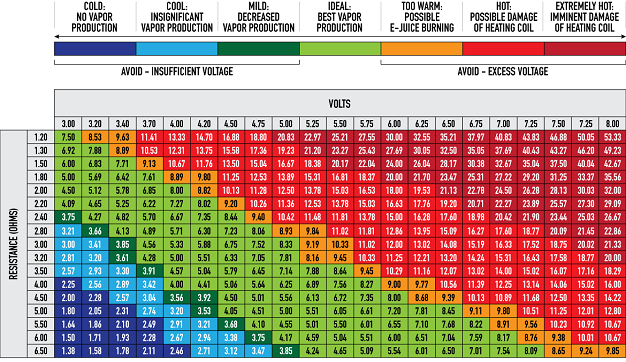

It might seem basic, but in light of recent results: if you’re studying e-cigarettes, and particularly their chemistry, the applied wattage and puffing schedule should be representative of what vapers use in the real world. The study that incited formaldegeddon used a basic top-coil clearomizer at a massively inappropriate 12 W, but it’s only really useful to look at e-cig chemistry if it represents a situation vapers would actually find themselves in.

Other, very similar studies that have found high levels of aldehydes have fallen prey to the same error. Here, reading the methodology is vital, and if some crucial information is omitted (the recent formaldehyde study didn’t even provide the atomizer resistance in the original draft) then that’s a sure sign the researchers don’t really know what they’re doing.
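To see why a missing resistance figure matters, here is a minimal Python sketch using the standard relation between power, voltage and resistance (P = V²/R). The 4.8 V setting and the list of resistances are hypothetical values chosen purely for illustration, not figures from any study.

```python
def power_watts(voltage: float, resistance_ohms: float) -> float:
    """Power delivered to a coil, from P = V^2 / R."""
    if resistance_ohms <= 0:
        raise ValueError("resistance must be positive")
    return voltage ** 2 / resistance_ohms


# Hypothetical values for illustration only (not taken from any study).
VOLTAGE = 4.8
for resistance in (1.5, 2.0, 2.5, 3.0):
    watts = power_watts(VOLTAGE, resistance)
    print(f"{VOLTAGE} V across {resistance} ohm -> {watts:.1f} W")
```

The same voltage delivers twice the wattage at 1.5 ohms as it does at 3.0 ohms, which is why a voltage reported without a resistance says very little about how hard the coil was actually being driven.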

2: Inferring Causation from Correlation

“Correlation does not imply causation” is an oft-repeated critique that’s a little more complicated than it may seem, since if there is causation, there’s probably correlation too. For example, studies that show reduced smoking rates as vaping increases are a decent reason to think that more vaping is causing the reductions in smoking, but since it’s just a correlation we can’t be absolutely sure based on that alone. Statistician Edward Tufte suggests the following alternative version of the rule: “Correlation is not causation but it sure is a hint.”

The general “correlation is not causation” issue is pretty common in youth vaping studies. Cross-sectional surveys ask about e-cigarette use and smoking at one single point in time, and tend to find that almost all of the vapers are also smokers: in other words, there is a correlation between vaping and smoking. However, a surprising number of these studies are reported as if they show a “gateway” effect from e-cigarettes: as if the vaping caused the smoking. The same issue limits the surveys which looked at “smoking intentions” and e-cig use – among never-smokers there is a correlation between weaker anti-smoking intentions and having tried an e-cig (although there are other issues here too), but this was interpreted as e-cigs causing the weaker anti-smoking intentions.

3: Failing to Consider Obvious Possibilities

This is related to the last point. Often, correlations have two (or more) possible explanations, but researchers somehow decide to focus on one specific possibility. In the “smoking intentions” survey mentioned above, the researchers focused on the “e-cigs weaken anti-smoking intentions” explanation, but completely ignored the possibility that existing weaker anti-smoking intentions make someone more likely to try vaping. In a situation like this, the natural question is: did the possibility not occur to the researchers, or was it ignored purposefully? It doesn’t necessarily mean there is an issue with the study itself – there could be a very good reason one possibility was discounted – but it does indicate that the conclusions drawn could be influenced by pre-existing biases. It’s better to acknowledge and discuss all possible explanations than to focus on your pet theory.

4: Poor Definitions of “Current User”

Another trope of studies looking at youth e-cig use is that their chosen definition of a “current user” of e-cigs is often inadequate. When somebody says “current vaper,” do you imagine somebody who vapes every day or at least on most days? Or do you think vaping at least once in the past month is a sufficient definition? We’re guessing that almost anybody, when asked to describe a current vaper, would say daily use or use on most days, but vaping at least once in the past month is the definition widely used in youth vaping research. This isn’t an issue, per se, but the detail is often missed in news reporting, which gives the impression that many recent experimenters are actually regular users.

5: Using Unrepresentative Samples

When you put together a group of participants for a study, the number one goal is that the group is representative of the population your finding applies to. This is the reason that studies recruiting vapers from forums and dedicated websites, which often find very high quit-rates, can’t be used to estimate the effectiveness of e-cigs for quitting smoking: dedicated vapers are not representative of the average smoker trying e-cigs. Nobody really tries to make these claims based on such studies, and this is why.

However, the equivalent claim is made based on findings which suggest e-cigs aren’t effective for quitting. The best example is a study that looked at quit-rates among smokers who had already tried e-cigarettes but then called a quit smoking hotline. Simply put, those who’d already tried vaping but still needed to call the hotline are the ones who couldn’t successfully quit by vaping: it’s a subpopulation of the “tougher nuts to crack” or the smokers e-cigs simply aren’t well-suited to, and so it’s completely useless for estimating the effectiveness of e-cigs for quitting in general. Focusing on the failures, like this study, or focusing on the successes, like the user surveys, does not provide widely applicable results.
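The selection effect described above can be illustrated with a toy simulation. This is only a sketch under invented assumptions: every smoker gets a hypothetical “difficulty” score between 0 and 1, an e-cig attempt succeeds with probability `1 - difficulty`, and a hotline attempt succeeds with probability `0.3 * (1 - difficulty)`. None of these numbers come from any study.

```python
import random


def simulate(n: int = 50_000, seed: int = 0):
    """Toy model of who ends up calling a quit-smoking hotline.

    All probabilities are made-up assumptions for illustration only.
    Returns (e-cig quit rate, hotline quit rate among prior e-cig triers,
    hotline quit rate among callers who never tried e-cigs).
    """
    rng = random.Random(seed)
    hotline_factor = 0.3  # assumed: hotline success = 0.3 * (1 - difficulty)

    def hotline_quit_rate(callers):
        quits = sum(rng.random() < hotline_factor * (1 - d) for d in callers)
        return quits / len(callers)

    # Group A: tried e-cigs first. Those who quit never call the hotline,
    # so only the unsuccessful (on average harder) cases remain.
    tried = [rng.random() for _ in range(n)]
    still_smoking = [d for d in tried if rng.random() >= (1 - d)]

    # Group B: called the hotline without ever trying e-cigs.
    never_tried = [rng.random() for _ in range(n)]

    ecig_quit_rate = 1 - len(still_smoking) / n
    return (
        ecig_quit_rate,
        hotline_quit_rate(still_smoking),
        hotline_quit_rate(never_tried),
    )


ecig_rate, prior_triers_rate, never_tried_rate = simulate()
print(f"quit with e-cigs:               {ecig_rate:.1%}")
print(f"hotline, previously tried e-cig: {prior_triers_rate:.1%}")
print(f"hotline, never tried e-cig:      {never_tried_rate:.1%}")
```

In this toy model e-cigs help roughly half of the people who try them, yet hotline callers who previously vaped still quit less often than callers who never vaped, purely because the easier cases were filtered out before they ever picked up the phone.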

6: No Full Peer Reviewed Study Published

While the peer review process is far from perfect, it’s obviously much harder to judge the reliability of findings if they haven’t even been through it, especially as a non-scientist. However, when findings are presented at a conference, ordinarily only the abstract is available, and abstracts often miss key details. A great example of this is the “e-cigs may lead to lung cancer in high-risk individuals” study, which has a headline-grabbing, eye-catching conclusion, but sadly very little information about the method. In particular, it reported no effects at a “low” nicotine concentration (based on levels observed in the blood of vapers), but suggestions of cancer-like behavior at an unstated “high” concentration. Without further detail, there’s no way to know how much this applies to real-world use.

Similarly, a study claiming that “e-cigs harm the lungs” was also widely disseminated despite no full, peer-reviewed study being available. Does that make it wrong? No (although in these cases, it seems likely that they’re at least misleading), but there is no way to be sure because we don’t have enough information.

7: Cherry-Picking

Cherry-picking – selectively including results that you like and ignoring others – is widespread, but it’s a particularly important issue when you’re supposed to be conducting a systematic review of the available evidence. The “systematic” nature of the review is supposed to guard against cherry-picking, but in some cases – like in a review from Rachel Grana, Neal Benowitz and Stanton Glantz – cherry-picking is still rife. This is why this particular review managed to conclude that e-cigs are ineffective for smoking cessation: the authors discarded many papers for no clear reason, and although they did include some with opposing conclusions, they focused unduly on the ones supporting their pre-determined viewpoint.

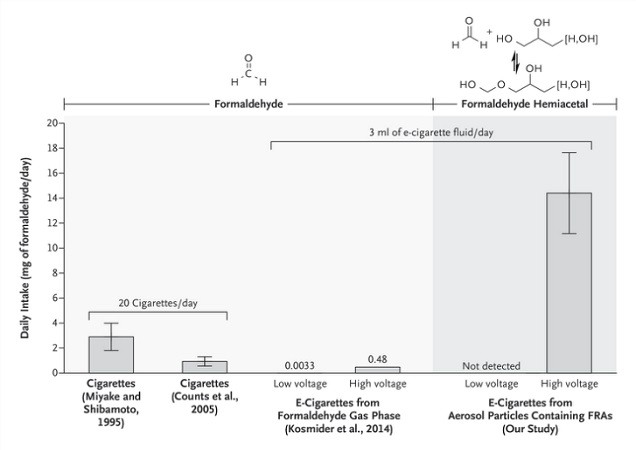

The formaldehyde study is guilty of cherry-picking in a different way. The high levels of formaldehyde hemiacetals were only found at the high voltage setting, with nothing detectable at the lower voltage used, but all of the subsequent calculations were based only on the high voltage figure. Had the researchers calculated formaldehyde-related cancer risk for the lower voltage (taking the limit of detection as the maximum possible), or even averaged the two, the conclusions would have been very different, but cherry-picking allowed them to focus on the extreme case to get their desired result.

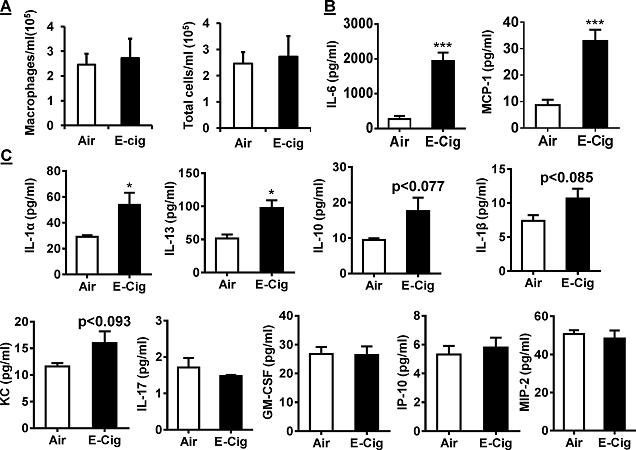

8: No Comparison with Cigarettes

E-cigarettes are an alternative to cigarette smoking, so in research addressing their safety, it’s logical to include a comparison with cigarettes. However, the results of such studies tend to be that e-cigarettes are much, much safer, so the findings aren’t particularly newsworthy. If a study lacks such a comparison with cigarettes, it doesn’t mean that the result isn’t right – for example, if e-cigs lead to lung inflammation, that fact doesn’t depend on whether cigarettes do too – but it does mean a crucial piece of information is missing. Without the comparison, most vapers – for whom the choice is between smoking and vaping rather than between vaping and nothing – won’t have anything concrete to base decisions on, and some may even get the impression that e-cigs might be as dangerous as smoking.

Breathing in vapor is obviously worse for you than breathing in air, and framing research as a comparison between the two – although it doesn’t affect the validity of the findings for e-cigarettes – means you can make e-cigarettes look bad rather than focusing on the crucial factor of the reduction in harm in comparison to smoking. If a study doesn’t offer a comparison, it should provide a very good explanation of why not, and whether or not it gives one, it’s clear that some vital information is being bypassed and you aren’t getting the full story.

9: Tiny Samples

This is a simple one: if you don’t have many participants, then your findings are less likely to represent the general population and chance statistical fluctuations are more likely to play a significant role. This is a more notable issue in studies on the effectiveness of e-cigs for quitting, and many examples come from positive findings: for example, a study showing that e-cigs may be effective for helping schizophrenic smokers quit only had 14 participants, and another prospective study with positive findings only had 40 participants. This doesn’t mean the findings are wrong, just that larger samples are needed to confirm the conclusions.
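To put a rough number on how much uncertainty a small sample carries, here is a short Python sketch that computes a 95% Wilson score interval for a proportion. The sample sizes 14 and 40 match the studies mentioned above, but the 50% quit rate (and the 1,000-person comparison) is a hypothetical figure chosen only to make the arithmetic easy to follow, not a result from either study.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96):
    """Wilson score interval for a proportion (z = 1.96 gives about 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return centre - half_width, centre + half_width


# Hypothetical 50% quit rate at three sample sizes (illustration only).
for quitters, participants in ((7, 14), (20, 40), (500, 1000)):
    low, high = wilson_interval(quitters, participants)
    print(f"{quitters}/{participants}: plausible quit rate {low:.0%} to {high:.0%}")
```

The observed rate is the same in every row, but the range of quit rates compatible with it narrows considerably as the sample grows, which is the practical reason small studies need confirming with larger ones.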

10: The Funding Source

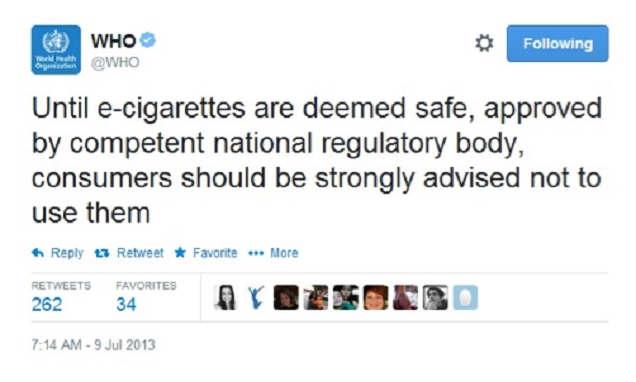

Again, this isn’t a hard-and-fast rule, but generally it makes sense to look at funding and conflicts of interest when considering the reliability of a study. It’s better to critique based on the methodology, but if you notice that a study is funded by an e-cig company or advocacy group – like one on second-hand vaping funded by the National Vapers Club – or a group with an axe to grind – like the systematic review mentioned earlier, funded by the World Health Organization – then it’s often prudent to be a little more critical than usual. The methodology and findings could well be robust, but if anybody is going to mislead, groups with obvious biases are among the most likely culprits. This is all the more problematic – and difficult to find out – when conflicting interests aren’t disclosed.

Conclusion – Spotting Bad Science Isn’t Easy

It’s worth emphasizing that the presence of one or more of these issues doesn’t mean you can disregard the findings completely: it’s more that things like this introduce caveats, and these caveats often directly contradict how such findings are disseminated. Lots of formaldehyde hemiacetals in unrealistic vaping scenarios quickly becomes “e-cigs release more formaldehyde than cigarettes” when crammed into headlines. With these points to look out for, it should be easier to see through poor reporting or even pick holes in the studies themselves, but you should be both cautious and even-handed (approach studies whose conclusions you like just as critically as ones you don’t). Luckily for vapers, however, the science is generally on our side.